Recently my Druid overlord and middle manager stopped indexing data.

I tried restarting the services several times, but it did not help.

The logs of the middle manager revealed the following:

    2018-10-02 06:01:37 [main] WARN RemoteTaskActionClient:102 - Exception submitting action for task[index_nom_14days_2018-10-02T06:01:29.633Z]
    java.io.IOException: Failed to locate service uri
        at io.druid.indexing.common.actions.RemoteTaskActionClient.submit(RemoteTaskActionClient.java:94) [druid-indexing-service-0.10.0.jar:0.10.0]
        at io.druid.indexing.common.task.IndexTask.isReady(IndexTask.java:150) [druid-indexing-service-0.10.0.jar:0.10.0]
        at io.druid.indexing.worker.executor.ExecutorLifecycle.start(ExecutorLifecycle.java:169) [druid-indexing-service-0.10.0.jar:0.10.0]
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_181]
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_181]
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_181]
        at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_181]
        at io.druid.java.util.common.lifecycle.Lifecycle$AnnotationBasedHandler.start(Lifecycle.java:364) [java-util-0.10.0.jar:0.10.0]
        at io.druid.java.util.common.lifecycle.Lifecycle.start(Lifecycle.java:263) [java-util-0.10.0.jar:0.10.0]
        at io.druid.guice.LifecycleModule$2.start(LifecycleModule.java:156) [druid-api-0.10.0.jar:0.10.0]
        at io.druid.cli.GuiceRunnable.initLifecycle(GuiceRunnable.java:102) [druid-services-0.10.0.jar:0.10.0]
        at io.druid.cli.CliPeon.run(CliPeon.java:277) [druid-services-0.10.0.jar:0.10.0]
        at io.druid.cli.Main.main(Main.java:108) [druid-services-0.10.0.jar:0.10.0]
    Caused by: io.druid.java.util.common.ISE: Cannot find instance of indexer to talk to!
        at io.druid.indexing.common.actions.RemoteTaskActionClient.getServiceInstance(RemoteTaskActionClient.java:168) ~[druid-indexing-service-0.10.0.jar:0.10.0]
        at io.druid.indexing.common.actions.RemoteTaskActionClient.submit(RemoteTaskActionClient.java:89) ~[druid-indexing-service-0.10.0.jar:0.10.0]

The key line is:

"Caused by: io.druid.java.util.common.ISE: Cannot find instance of indexer to talk to!"

The middle manager and its peons could not find the overlord service, so indexing never started and every job failed.

Root Cause:

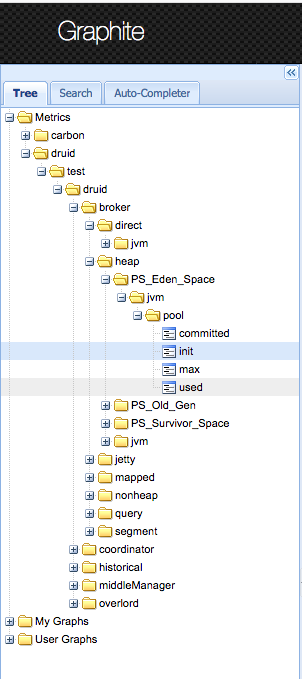

I logged into ZooKeeper and found the following two entries, for the coordinator and the overlord, to be empty even though both services were up and running.

The middle manager looks up the overlord in ZooKeeper, so with these entries empty it could not find an indexer to talk to.

    [zk: localhost:2181(CONNECTED) 1] ls /druid/discovery/druid:coordinator
    []

    [zk: localhost:2181(CONNECTED) 3] ls /druid/discovery/druid:overlord
    []

Fix:

Assuming that the coordinator runs on Host_A and the overlord runs on Host_B and … do the following:

(1) Generate two random IDs: one for the coordinator and one for the overlord.

Druid generates its IDs with Java code along these lines:

    System.out.println(java.util.UUID.randomUUID().toString());

but you can use any random string.

Let's assume you generate

'45c3697f-414f-48f2-b1bc-b9ab5a0ebbd4' for the coordinator

and

'f1babb39-26c1-42cb-ac4a-0eb21ae5d77d' for the overlord.
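As a minimal sketch, the one-liner above can be wrapped in a small program that prints one ID per service. The class name `DiscoveryIds` and the helper `newId` are made up for this illustration and are not part of Druid.

```java
import java.util.UUID;

// Hypothetical helper (not part of Druid): prints one random id per service.
class DiscoveryIds {
    // Returns a random UUID string, e.g. 45c3697f-414f-48f2-b1bc-b9ab5a0ebbd4.
    static String newId() {
        return UUID.randomUUID().toString();
    }

    public static void main(String[] args) {
        System.out.println("coordinator id: " + newId());
        System.out.println("overlord id:    " + newId());
    }
}
```

Every run prints different values; keep the two IDs at hand for the next step.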

(2) Log into the ZooKeeper CLI and run the following commands:

    [zk: localhost:2181(CONNECTED) 3] create /druid/discovery/druid:coordinator/45c3697f-414f-48f2-b1bc-b9ab5a0ebbd4 {"name":"druid:coordinator","id":"45c3697f-414f-48f2-b1bc-b9ab5a0ebbd4","address":"HOST_A_IP","port":8081,"sslPort":null,"payload":null,"registrationTimeUTC":1538459656828,"serviceType":"DYNAMIC","uriSpec":null}

    [zk: localhost:2181(CONNECTED) 3] create /druid/discovery/druid:overlord/f1babb39-26c1-42cb-ac4a-0eb21ae5d77d {"name":"druid:overlord","id":"f1babb39-26c1-42cb-ac4a-0eb21ae5d77d","address":"HOST_B_IP","port":8090,"sslPort":null,"payload":null,"registrationTimeUTC":1538459763281,"serviceType":"DYNAMIC","uriSpec":null}

You will notice a registrationTimeUTC field. It holds a timestamp in milliseconds since the Unix epoch; put in any time you see fit.
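To avoid hand-editing the JSON, here is a rough sketch that assembles the two `create` commands with the current time in the timestamp field. The class `ZkCreateCommand` and its `build` method are hypothetical helpers written for this post. HOST_A_IP and HOST_B_IP are the same placeholders as above, and the ports are the ones used above.

```java
import java.util.Locale;
import java.util.UUID;

// Hypothetical helper (not part of Druid or ZooKeeper): builds the zkCli
// "create" command for one discovery node, mirroring the JSON shown above.
class ZkCreateCommand {
    static String build(String service, String id, String address, int port, long registeredAtMillis) {
        // The JSON contains no spaces, so zkCli treats it as a single data argument.
        String json = String.format(Locale.ROOT,
                "{\"name\":\"druid:%s\",\"id\":\"%s\",\"address\":\"%s\",\"port\":%d,"
                        + "\"sslPort\":null,\"payload\":null,\"registrationTimeUTC\":%d,"
                        + "\"serviceType\":\"DYNAMIC\",\"uriSpec\":null}",
                service, id, address, port, registeredAtMillis);
        return "create /druid/discovery/druid:" + service + "/" + id + " " + json;
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis(); // epoch milliseconds
        System.out.println(build("coordinator", UUID.randomUUID().toString(), "HOST_A_IP", 8081, now));
        System.out.println(build("overlord", UUID.randomUUID().toString(), "HOST_B_IP", 8090, now));
    }
}
```

Replace the placeholder addresses with your real hosts, then paste the printed lines into the ZooKeeper CLI.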

This should create the ZooKeeper nodes that the middle manager looks up.

Now restart the coordinator, then the overlord, then the middle manager.

Submit an indexing task and it should work.

Additional tips:

In $Druid_Home/conf/druid/middleManager/runtime.properties you may have:

druid.indexer.runner.javaOpts=-cp conf/druid/_common:conf/druid/middleManager/:conf/druid/middleManager:lib/* -server -Ddruid.selectors.indexing.serviceName=druid/overlord -Xmx7g -XX:+UseG1GC -XX:MaxGCPauseMillis=100 -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -Dhadoop.mapreduce.job.user.classpath.first=true

and in $Druid_Home/conf/druid/middleManager/jvm.config you may have:

-Ddruid.selectors.indexing.serviceName=druid/overlord
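The service name in these settings (druid/overlord) and the ZooKeeper node inspected earlier (druid:overlord) differ only in the separator. The sketch below shows how the two appear to relate. It assumes the node name is the service name with "/" replaced by ":", which matches the paths seen in this post but is my reading, not something confirmed here. `DiscoveryPath` is a made-up helper.

```java
// Hypothetical helper (not part of Druid): maps a service name to the
// ZooKeeper discovery path, ASSUMING "/" in the name becomes ":" in the node.
class DiscoveryPath {
    static String forService(String basePath, String serviceName) {
        return basePath + "/" + serviceName.replace('/', ':');
    }

    public static void main(String[] args) {
        // prints /druid/discovery/druid:overlord
        System.out.println(forService("/druid/discovery", "druid/overlord"));
    }
}
```

If the serviceName in your configuration maps to a different node than the one you created, the middle manager would presumably still fail to find the overlord, so it is worth checking that the two line up.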

Hope this helps you.