These days many teams deploy their apps on Kubernetes. At my current workplace, Eyeota, we recently moved one of our Golang apps to Kubernetes.

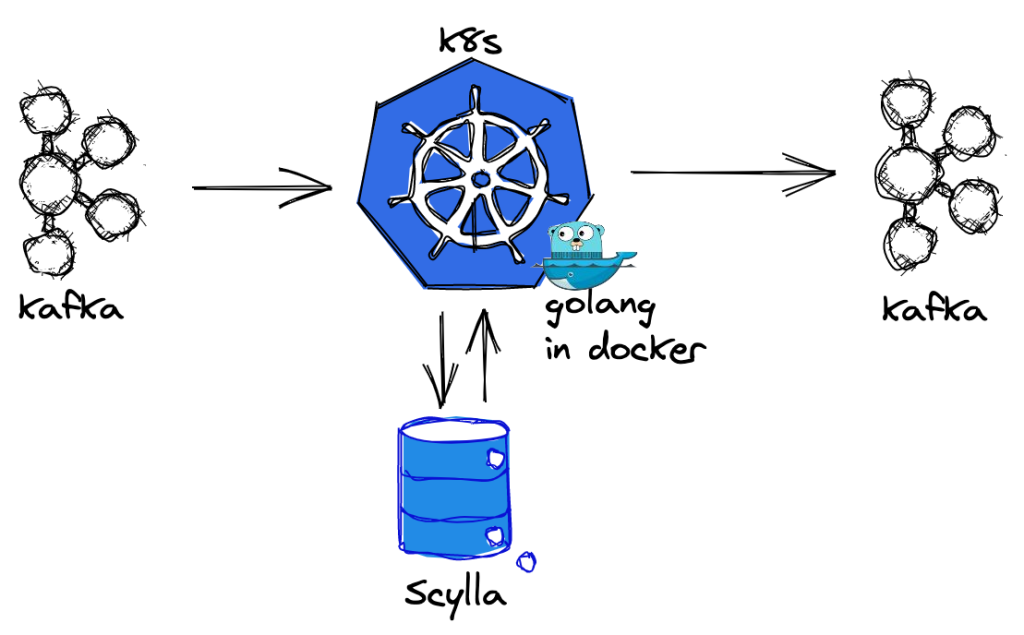

We have a simple Golang application which reads messages from Kafka, enriches them via a Scylla lookup, and pushes the enriched messages back to Kafka.

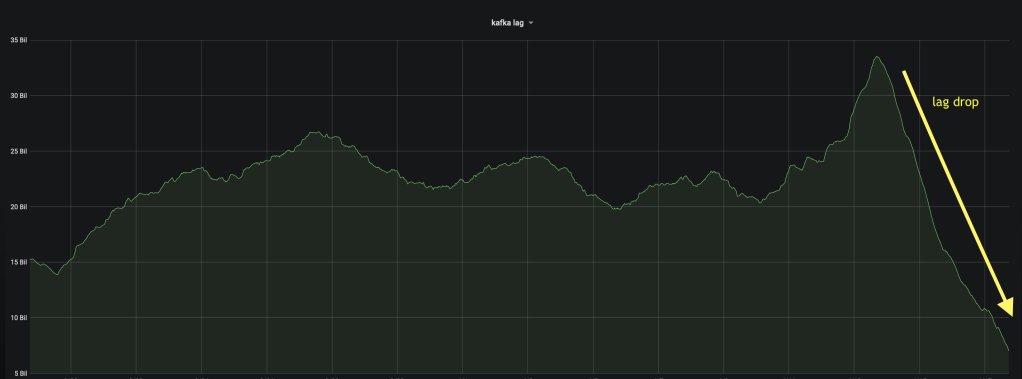
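To make the shape of the app concrete, here is a minimal sketch of the read, enrich, write loop. It is not our production code: channels stand in for the Kafka topics, a plain function stands in for the Scylla lookup, and `Message`, `Lookup`, `enrich` and `runPipeline` are hypothetical names made up for this illustration. Forwarding unmatched messages unchanged is also just an assumption of the sketch.

```go
package main

import "fmt"

// Message is a hypothetical stand-in for a Kafka record.
type Message struct {
	Key   string
	Value string
}

// Lookup is a hypothetical stand-in for the Scylla query.
// It returns the enrichment data for a key and whether the key was found.
type Lookup func(key string) (string, bool)

// enrich reads messages from in, appends the looked-up data to the value,
// and writes the result to out. Messages without a match are forwarded
// unchanged (an assumption of this sketch). It closes out when in is drained.
func enrich(in <-chan Message, out chan<- Message, lookup Lookup) {
	defer close(out)
	for m := range in {
		if extra, ok := lookup(m.Key); ok {
			m.Value = m.Value + "|" + extra
		}
		out <- m
	}
}

// runPipeline wires a producer, one enrich worker and a collector together.
// With a single worker the output order matches the input order.
func runPipeline(msgs []Message, lookup Lookup) []Message {
	in := make(chan Message)
	out := make(chan Message)

	// Producer: plays the role of the Kafka consumer.
	go func() {
		defer close(in)
		for _, m := range msgs {
			in <- m
		}
	}()

	go enrich(in, out, lookup)

	// Collector: plays the role of the Kafka producer.
	var result []Message
	for m := range out {
		result = append(result, m)
	}
	return result
}

func main() {
	table := map[string]string{"user-1": "segment-a"}
	lookup := func(k string) (string, bool) {
		v, ok := table[k]
		return v, ok
	}
	input := []Message{{Key: "user-1", Value: "click"}, {Key: "user-2", Value: "view"}}
	for _, m := range runPipeline(input, lookup) {
		fmt.Println(m.Key, m.Value)
	}
}
```

The real app runs many such workers as goroutines, which is why the CPU settings discussed later matter so much.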

This app had been running well for years, but after the move to Kubernetes we noticed that Kafka lag started to grow at a high rate. During this time our Scylla cluster had been experiencing issues and DB lookups were taking longer, which contributed to the delayed processing and Kafka lag.

Our devops team had added more nodes to the scylla cluster in an effort to decrease the db slowness, but the lag continued to grow.

Steps to Mitigate:

- Increase the number of pods: we went from 4 to 6 pods. This did not help much.

- Reduce DB timeouts/retries: this did not help much either.

Further Analysis of Metrics Dashboard:

This time I chose to have a look at the Kubernetes dashboard and found that one particular metric looked strange:

“kubernetes cpu pod throttling” !

When I checked other apps, this metric was quite low, but not for my app. It seemed that Kubernetes was throttling my app.
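If you don't have such a dashboard, the same signal can be read from inside the container. The kernel keeps CFS counters in the cgroup's `cpu.stat` file, including `nr_periods` (scheduling periods elapsed) and `nr_throttled` (periods in which the cgroup hit its quota). Below is a minimal sketch that turns those two counters into a ratio. I am assuming the dashboard metric is derived from these counters; the exact query behind our dashboard is not shown here, and `throttledRatio` is a hypothetical helper name.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// throttledRatio parses the text of a cgroup cpu.stat file and returns
// nr_throttled / nr_periods, i.e. the fraction of CFS periods in which
// the cgroup was throttled. It returns 0 if no periods were recorded.
func throttledRatio(cpuStat string) (float64, error) {
	var periods, throttled uint64

	sc := bufio.NewScanner(strings.NewReader(cpuStat))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) != 2 {
			continue // skip blank or unexpected lines
		}
		switch fields[0] {
		case "nr_periods", "nr_throttled":
			v, err := strconv.ParseUint(fields[1], 10, 64)
			if err != nil {
				return 0, fmt.Errorf("parse %s: %v", fields[0], err)
			}
			if fields[0] == "nr_periods" {
				periods = v
			} else {
				throttled = v
			}
		}
	}
	if err := sc.Err(); err != nil {
		return 0, err
	}
	if periods == 0 {
		return 0, nil
	}
	return float64(throttled) / float64(periods), nil
}

func main() {
	// Sample content with made-up numbers, in the cgroup v1 layout.
	sample := "nr_periods 1000\nnr_throttled 250\nthrottled_time 123456789\n"

	ratio, err := throttledRatio(sample)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("throttled in %.0f%% of periods\n", ratio*100)
}
```

In a real pod you would pass in the contents of the file instead of the sample string. Its location depends on the cgroup version and how the container runtime mounts it, so check your own setup rather than trusting a hard-coded path.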

Fix

Based on this Golang issue (runtime: make GOMAXPROCS cfs-aware on GOOS=linux), it seemed that Golang did not respect the CPU quota set for it in Kubernetes, i.e. Golang was setting GOMAXPROCS to a value greater than the quota.

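We run a lot of goroutines in our code! With GOMAXPROCS above the quota, those goroutines can run on more threads in parallel than the quota pays for, so the pod uses up its CPU allowance early in each scheduling period and is throttled for the rest of it.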
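To see the mismatch for yourself, compare what the Go runtime picked with what the quota allows. The sketch below is only an illustration: `procsForQuota` is a hypothetical helper, the quota and period values are made up (a 2 CPU limit with the usual 100ms CFS period), and rounding down with a minimum of 1 is my assumption about a sensible conversion, not a statement of what any particular library does internally.

```go
package main

import (
	"fmt"
	"runtime"
)

// procsForQuota converts a CFS quota and period (both in microseconds)
// into a GOMAXPROCS value: quota/period rounded down, but at least 1.
// ok is false when no usable quota is set (quota or period <= 0).
func procsForQuota(quotaUs, periodUs int64) (procs int, ok bool) {
	if quotaUs <= 0 || periodUs <= 0 {
		return 0, false
	}
	procs = int(quotaUs / periodUs)
	if procs < 1 {
		procs = 1
	}
	return procs, true
}

func main() {
	// Hypothetical pod: CPU limit of 2 with a 100ms period.
	allowed, _ := procsForQuota(200000, 100000)

	// GOMAXPROCS(0) reports the current setting without changing it.
	fmt.Println("GOMAXPROCS chosen by the runtime:", runtime.GOMAXPROCS(0))
	fmt.Println("CPUs visible to the process:     ", runtime.NumCPU())
	fmt.Println("GOMAXPROCS the quota would allow:", allowed)
}
```

On a large node with the Go version we were using, the first two numbers follow the node's core count, while the third follows the pod's limit. That gap is what gets the pod throttled.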

To work around this, we imported Uber's automaxprocs library in our code, which makes sure that GOMAXPROCS is set according to the Kubernetes CPU quota.

i.e. a simple one-line change:

`import _ "go.uber.org/automaxprocs"`

Voilà, pushed the change and deployed it.

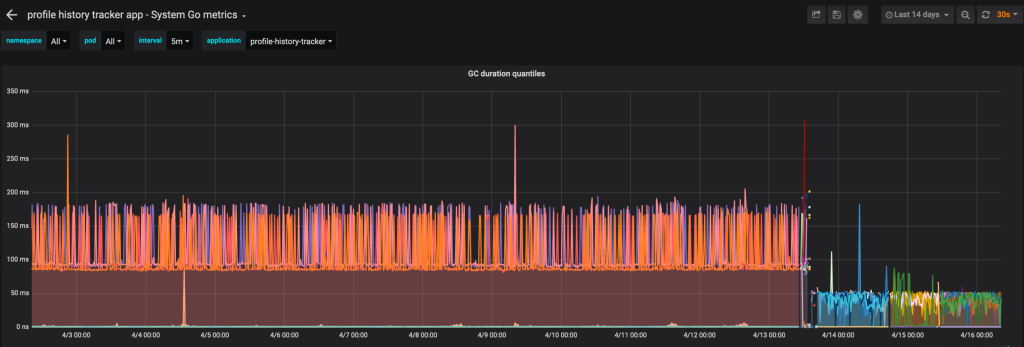

The effects were as follows:

1. Pod throttling metric decreased ⬇️ ✅

2. Golang GC times decreased ⬇️ ✅

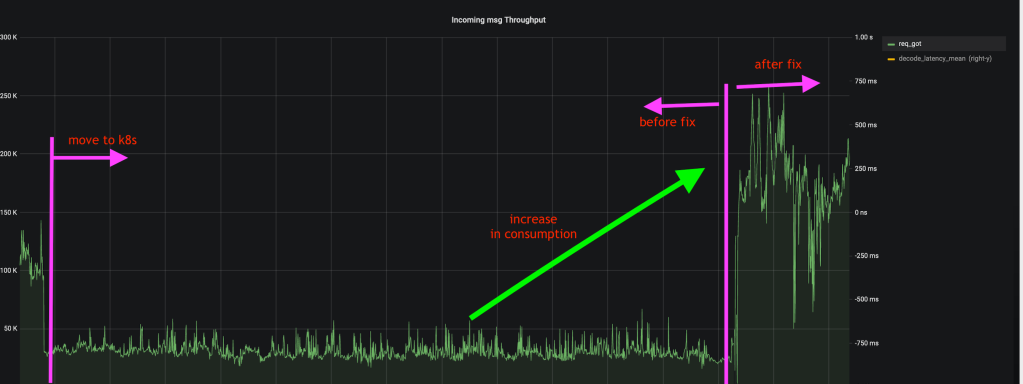

3. Kafka message consumption increased ↗️ ✅ & lag decreased ↘️ ✅

After the fix was deployed, we noticed an increase in message consumption. When we increased the number of pods from 4 to 8 (note: pod quotas remained unchanged), consumption increased even more (horizontal scaling was working).

Lag also began to drop nicely 😇.

Recommendation:

If you are using Golang, do check whether pod throttling is occurring. You might be happy with your app even if it's happening, but once it's removed, the app can do better 😀

Any feedback is appreciated. Thanks for reading.

PS: Golang version 1.12, Kubernetes version v1.18.2 on Linux