Hi guys,

Data pipelines often have a dedup phase to remove duplicate records.

Well, I came across a scenario where:

- the data to dedup was < 100 MB

- our company goes with a serverless approach

- we are a startup, so fast development is a must

So naturally, I thought of:

- AWS Step Functions to serve as our pipeline, since the input data is < 100 MB

- a Lambda for each phase of the pipeline:

  - Dedup

  - Quality check

  - Transform

  - Load to warehouse
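The sketch below is a hypothetical local simulation in Java (the language of the Spark snippet) that shows the shape of this pipeline: four phases run in order, each one standing in for a Lambda. Step Functions caps the data passed between states at 256 KiB. A common pattern, assumed here, is for each Lambda to read its input from S3 and hand only the S3 key of its output to the next state. The class name, phase names and key prefixes are all made up for illustration, and no AWS calls are made.

```java
import java.util.List;
import java.util.function.UnaryOperator;

// Hypothetical local simulation: each phase stands in for one Lambda.
// A phase receives the S3 key of its input and returns the S3 key of its output,
// the way a Step Functions state passes its output to the next state.
public class PipelineSketch {

    // Hypothetical naming scheme: each phase writes under a prefix named after itself.
    static UnaryOperator<String> phase(String name) {
        return inputKey -> {
            // A real Lambda would download inputKey from S3, process it and upload the result.
            // (The load phase would write to the warehouse instead; here it just returns a key.)
            String fileName = inputKey.substring(inputKey.lastIndexOf('/') + 1);
            return name + "/" + fileName;
        };
    }

    static final List<UnaryOperator<String>> PHASES = List.of(
            phase("dedup"),
            phase("quality-check"),
            phase("transform"),
            phase("load"));

    // Runs the phases in order, feeding each phase's output key into the next one.
    static String run(String inputKey) {
        String key = inputKey;
        for (UnaryOperator<String> p : PHASES) {
            key = p.apply(key);
        }
        return key;
    }

    public static void main(String[] args) {
        System.out.println(run("raw/events.json")); // prints load/events.json
    }
}
```

Passing keys instead of records keeps every state payload tiny, whatever the file size.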

Now, for the Dedup Lambda: since I had used Spark before, I thought it would be about 3 lines of code and therefore easy to implement.

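```java
import org.apache.spark.sql.Dataset;
import org.apache.spark.sql.Row;
import org.apache.spark.sql.SparkSession;
// Spark in single-core local mode; inputPath, outputPath and columnName are placeholders
SparkSession sparkSession = SparkSession.builder().master("local[1]").appName("dedup").getOrCreate();
Dataset<Row> inputData = sparkSession.read().json(inputPath);   // read the JSON records
Dataset<Row> deduped = inputData.dropDuplicates(columnName);    // keep one row per distinct columnName value
deduped.write().json(outputPath);                               // write the deduped records
```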

Using Spark in local mode (`local[1]`), I packaged it and ran the Lambda… it took > 20 seconds for 10 MB of data ☹️

This got me thinking 🤔… Spark sounds like overkill for this.

So I decided to write my own code in Python (boto3 for S3 interaction + a simple hashmap to dedup).
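Here is a minimal sketch of just the hashmap dedup step. It is written in Java to match the Spark snippet, not the Python the real solution used, and it leaves out the S3 download and upload that boto3 handled. It assumes the records are already parsed into maps and that two records are duplicates when they share a value in one column, mirroring `dropDuplicates(columnName)`. `HashMapDedup` and `dedupByColumn` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Sketch of the hashmap dedup step only (no S3 I/O).
// Assumption: a record is a duplicate if an earlier record has the same value in `column`.
public class HashMapDedup {

    static List<Map<String, Object>> dedupByColumn(List<Map<String, Object>> records, String column) {
        // LinkedHashMap remembers insertion order, so the output follows the input order.
        Map<Object, Map<String, Object>> firstSeen = new LinkedHashMap<>();
        for (Map<String, Object> record : records) {
            // putIfAbsent stores the first record for each key and ignores later duplicates.
            firstSeen.putIfAbsent(record.get(column), record);
        }
        return new ArrayList<>(firstSeen.values());
    }

    public static void main(String[] args) {
        List<Map<String, Object>> records = List.of(
                Map.of("id", 1, "name", "a"),
                Map.of("id", 2, "name", "b"),
                Map.of("id", 1, "name", "a-duplicate"));
        System.out.println(dedupByColumn(records, "id").size()); // prints 2
    }
}
```

This is a single pass that holds at most one record per distinct key in memory, which is fine for data in the tens of MB. The sketch keeps the first occurrence of each key. Spark's `dropDuplicates` does not document which duplicate survives.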

The result: a 92.8% decrease 😮 in running time (23.7 s → 1.7 s) lol

So let's summarize:

| Apache Spark (local mode) | Own Solution (python boto3 + hashmap) | |

| code effort | very low (spark does everything) | medium (have to write code to download + dedup + upload to s3) |

| Mem requirements | >=512 MB (spark needs min 512) | <128 MB |

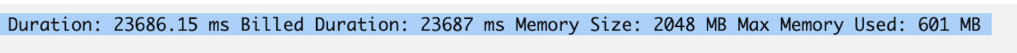

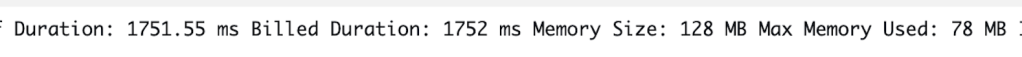

| Mem Used | 600MB | 78 MB |

| Running time Sec | 23.7 ❌ | 1.7 ✅ |
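The percentage savings follow directly from the measured numbers:

$$
\frac{23.7\ \text{s} - 1.7\ \text{s}}{23.7\ \text{s}} = \frac{22.0}{23.7} \approx 0.928 \quad (92.8\%\ \text{less running time})
$$

$$
\frac{600\ \text{MB} - 78\ \text{MB}}{600\ \text{MB}} = \frac{522}{600} = 0.87 \quad (87\%\ \text{less memory used})
$$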

Moral of the Story

- When you think data pipelines, don't automatically reach for Apache Spark

- Keep in mind the amount of data being processed

- Less code is good for maintenance, but it might not be performant

  - Ease of development is a priority, but cost comes first

- Keep an eye on cost $ 💰

  - Here we cut cost by roughly 92% or more: Lambda bills by running time × configured memory, and the running time alone fell by 92.8%
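Lambda's compute charge scales with configured memory × billed duration (GB-seconds), not with the memory actually used. The post doesn't say how much memory each function was configured with. The numbers below therefore assume, hypothetically, 1024 MB for the Spark version (it used 600 MB) and 128 MB, the Lambda minimum, for the Python one. Per-request charges are ignored.

$$
\text{Spark: } 1\ \text{GB} \times 23.7\ \text{s} = 23.7\ \text{GB-s} \qquad \text{Python: } 0.125\ \text{GB} \times 1.7\ \text{s} = 0.2125\ \text{GB-s}
$$

$$
1 - \frac{0.2125}{23.7} \approx 1 - 0.009 = 0.991 \quad (\approx 99\%\ \text{lower compute cost under these assumptions})
$$

If both functions had the same memory setting, the saving would equal the 92.8% runtime reduction. Lowering the memory setting as well pushes it higher.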