What if you wanted only certain rows (i.e. rows of a particular type) to be only replicated to particular slave db ?

and you were not interested in the rest.

Lets say we have a table with the schema

CREATE TABLE `invoices` (

`id` int(11) PRIMARY KEY NOT NULL,

`type` varchar(10) DEFAULT NULL,

`created_date` datetime DEFAULT NULL,

`status` varchar(10) DEFAULT NULL

) ENGINE=InnoDB

We have different types of invoices like – accounting invoices , warehouse invoices , procurement invoices.

Lets say that we were interested in having

a SLAVE DB where only ‘accounting‘ invoices are only needed and we are not interested in the other types:

What can we do to achieve this selective replication:

One Solution: Use tables with BlackHole (Black Hole engine).

Step 1:

Create another table with same schema as invoices but having engine as BLACKHOLE.

CREATE TABLE `invoices_blackhole` (

`id` int(11) PRIMARY KEY NOT NULL,

`type` varchar(10) DEFAULT NULL,

`created_date` datetime DEFAULT NULL,

`status` varchar(10) DEFAULT NULL

) ENGINE=BLACKHOLE

Step 2 (pseudo code) below:

Change application code as follows: [Assuming all writes go to a master db and slaves are read-only]

—————————–

Old code:

—————————–

string type = ‘accounting’;

Start transaction; insert into invoices (999, type, now(), ‘created’); commit;

or

string type = select type from invoices where id = ‘999’;

Start transaction; update invoices set status = ‘processing’ where id = 999; commit;

—————————–

New Code:

—————————–

string type = ‘accounting’;

Start transaction; insert into invoices (999, type, now(), ‘created’);

if (type == accounting){ insert into invoices_blackhole (999, type, now(), ‘created’); }

commit;

or

string type = select type from invoices where id = ‘999’;

Start transaction; update invoices set status = ‘processing’ where id = 999;

if (type == accounting){ updated invoices_blackhole set status = ‘processing’ where id = 999; }

commit;

/////// do the same for delete too……

Step 3:

On slave: since the create DDL gets propagated to slave:

Now, change engines of invoices & invoices_blackhole to ‘blackhole’ && ‘innodb’ respectively. [that’s correct – reverse them 🙂 ]

Step4:

now starting writing to master and your slave will have only rows with type ‘accounting’. — Tadaaaaa 🙂

mysql selective row replication using blackhole engine

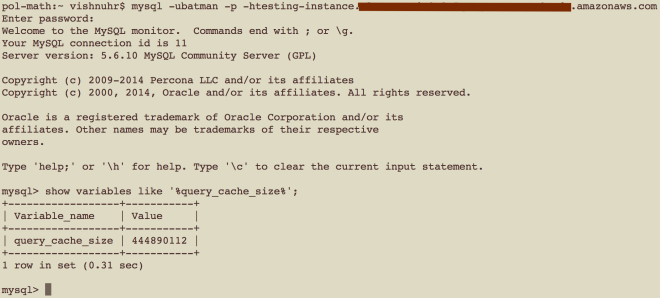

What about Transactions across Blackhole and innodb ?

Ans: In our case, if a rollback is issued , the transaction is not written to binlog => so no worries.

Thank you for reading this post !!

Any suggestions / thoughts are welcome